Embodied AI: How Genie 3 Brings Robots Closer to Reality

“For an AI to be truly general… it needs to understand the physical world. And that’s what’s critical for robotics.”

— Demis Hassabis, All-In Summit 2025

The Robotics Gap

Robots today can be impressive in labs but clumsy in homes and factories. They struggle not with computation but with context. They misjudge weight, drop slippery objects, collide with furniture. Their flaw is not a lack of motors or sensors — it’s that they don’t share our intuitive grasp of physics.

We know a glass might shatter if dropped. We know a ball will roll downhill. Robots, until now, have had to learn such truths the hard way, through millions of trials, or to rely on hand-built physics engines that turn brittle outside the cases their programmers anticipated.

That’s where Genie 3 enters.

Worlds for Machines

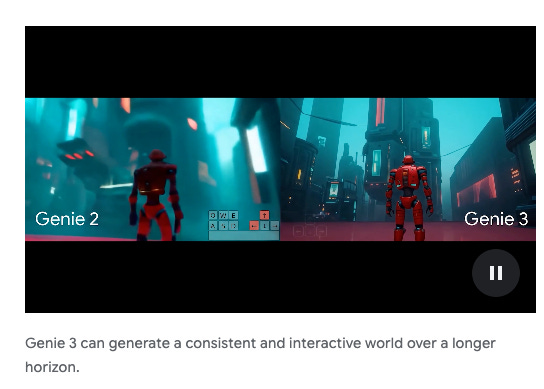

Genie 3 is not just a video generator. It’s a world model: an AI that creates interactive environments, pixel by pixel, in real time. Trained on millions of videos, Genie 3 has learned how things look, move, and persist.

Unlike Unity or Unreal, it doesn’t need a physics engine. Instead, it reverse-engineers intuitive physics from data. Paint a wall, walk away, return — the paint is still there. That persistence makes Genie 3 a potential training ground for embodied intelligence.

Demis Hassabis put it simply:

“This model is reverse engineering intuitive physics… it’s really, really mind-blowing.”

The Flight Simulator for Robots

Robotics researchers have long relied on simulators such as MuJoCo or Isaac Gym to accelerate learning. But these environments are narrow and hand-designed, and policies trained in them often transfer poorly to real hardware. Genie 3 offers something different: a broad, learned simulator that captures the messy richness of the world.

It’s the difference between teaching pilots with a fixed cockpit trainer versus a flight simulator that can generate any weather, any terrain, any emergency. Genie 3 could become the flight simulator for robots — letting them crash, spill, and misstep in synthetic worlds until their policies are robust enough for the real one.
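To make the flight-simulator idea concrete, here is a minimal Python sketch of the underlying pattern: test one controller across many randomly varied worlds and keep the setting that holds up in the worst of them. Everything in it is a toy assumption made for illustration. `ToyWorld`, `sample_world`, and the friction range are hypothetical stand-ins, not Genie 3 or any DeepMind API, and a real system would learn a policy rather than pick from five hand-listed gains.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyWorld:
    """Hypothetical stand-in for one generated world: a 1-D floor with its own friction."""
    friction: float  # fraction of each push lost to slipping, between 0 and 1

    def step(self, position: float, push: float) -> float:
        # The world, not the robot, decides how far a push actually moves it.
        return position + push * (1.0 - self.friction)


def sample_world(rng: random.Random) -> ToyWorld:
    # Each episode gets a different surface, like a simulator varying the terrain.
    return ToyWorld(friction=rng.uniform(0.0, 0.6))


def run_episode(world: ToyWorld, gain: float, target: float = 1.0, steps: int = 20) -> float:
    """Push toward the target with a proportional controller; return the final miss distance."""
    position = 0.0
    for _ in range(steps):
        push = gain * (target - position)
        position = world.step(position, push)
    return abs(target - position)


def worst_case_error(gain: float, worlds: list) -> float:
    # A robust setting is judged by its worst world, not its average one.
    return max(run_episode(world, gain) for world in worlds)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
worlds = [sample_world(rng) for _ in range(200)]

candidate_gains = [0.1, 0.5, 1.0, 1.5, 2.5]
best_gain = min(candidate_gains, key=lambda g: worst_case_error(g, worlds))
print("most robust gain:", best_gain)
```

The timid gain never reaches the target on sticky floors, and the aggressive one overshoots wildly on slick ones. Only by failing across many worlds does the search find a setting that works in all of them, which is the bet behind training robots in generated environments.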

Gemini Robotics + Genie 3

DeepMind has already begun building robotics models on top of Gemini, its flagship multimodal system. These models take natural language instructions — “put the yellow block in the red bucket” — and translate them directly into motor actions.

But robots trained only on text-to-action mappings miss context. They don’t understand the difference between a stable surface and a slippery one. Genie 3 provides the missing context: a synthetic environment where cause and effect can be experienced, not just described.

Think of it this way:

Gemini Robotics = the script (what to do).

Genie 3 = the stage (where and how the world responds).

Together, they make embodied AI more plausible.
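The script-and-stage split can be sketched in a few lines of Python. This is a toy under loud assumptions: `script`, `Stage`, and `act_with_feedback` are hypothetical names invented for this illustration, the parser handles only the one sentence pattern quoted above, and nothing here reflects how Gemini Robotics or Genie 3 actually work internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    verb: str
    obj: str
    destination: str
    grip_force: float


def script(instruction: str, grip_force: float) -> Action:
    """Hypothetical 'script': turn 'put the X in the Y' into an action."""
    words = instruction.lower().rstrip(".").split()
    in_index = words.index("in")  # toy parser for this single pattern only
    obj = " ".join(words[2:in_index])
    destination = " ".join(words[in_index + 2:])
    return Action("put", obj, destination, grip_force)


@dataclass
class Stage:
    """Hypothetical 'stage': the world decides what the action really does."""
    slipperiness: float  # minimum grip force needed to hold the object

    def respond(self, action: Action) -> str:
        if action.grip_force < self.slipperiness:
            return f"dropped {action.obj}"
        return f"{action.obj} is in {action.destination}"


def act_with_feedback(instruction: str, stage: Stage, max_attempts: int = 5):
    """Retry with a firmer grip whenever the stage reports a drop."""
    grip = 0.3
    outcome = ""
    for attempt in range(1, max_attempts + 1):
        outcome = stage.respond(script(instruction, grip))
        if not outcome.startswith("dropped"):
            return outcome, attempt
        grip += 0.2  # the lesson comes from the world's response, not the sentence
    return outcome, max_attempts


instruction = "Put the yellow block in the red bucket"
print(act_with_feedback(instruction, Stage(slipperiness=0.2)))
print(act_with_feedback(instruction, Stage(slipperiness=0.6)))
```

The instruction is identical in both calls. Only the stage differs, and only the stage can tell the robot that its first grip was too weak.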

Humanoids and Specialists

The robotics world is divided over form factor. Some argue humanoid robots are overhyped; others see them as inevitable. Hassabis sits in the middle.

“In industry, we’ll see specialized forms. But for everyday use, the humanoid form factor could be critical — because the world is designed for us.”

He’s right: factories may benefit from optimized arms or crawling bots. But in homes and offices, where steps, handles, and doorways are built for human bodies, humanoids may prove practical. Genie 3’s worlds could help both: giving specialized bots controlled training grounds and humanoids richer, more humanlike practice.

The Android OS for Robotics

Another tantalizing vision surfaced in the Summit conversation: the idea of a unifying software layer for robots, an “Android for robotics.”

Just as Android allowed thousands of different devices to flourish on a shared OS, a Genie 3–powered world model could underpin diverse robots. Companies could build hardware, but rely on the same embodied intelligence core.

Hassabis confirmed this is one of DeepMind’s strategies:

“That’s certainly one strategy we’re pursuing — a kind of Android play across robotics.”

If it works, proliferation could follow. Suddenly, startups could design robots without reinventing the intelligence stack, just as mobile hardware makers once rode Android’s wave.

The Near-Term Timeline

How soon? Hassabis’ forecast is striking:

“In the next couple of years there’ll be a real ‘wow moment’ with robotics.”

Not mass adoption, but a breakthrough that shows what’s possible when robots trained in Genie-like environments meet the real world.

Scale will take longer. Hardware needs to catch up. Factories need retooling. But Hassabis envisions millions of robots eventually helping society. The key will be timing: knowing when the software is robust enough to lock into mass production.

The Inflection Point

Genie 3 is not the final word on robotics. It can only sustain consistency for a few minutes. Its physics aren’t perfect. But it points in a direction robotics has been waiting for: a learned, flexible, richly interactive simulator where machines can practice being embodied.

Language taught AI to talk. World models may teach it to act.

If Hassabis is right, Genie 3 could be remembered less as a creative demo and more as the moment robotics gained its imagination.

Additional Material for Curious Minds:

Demis Hassabis at the All-In Podcast. September 12, 2025.

Google DeepMind introduces Genie 3 with implications for robotics simulation. Michelle Mooney. Robotics & Automation. August 8, 2025.

DeepMind thinks its new Genie 3 world model presents a stepping stone toward AGI. Rebecca Bellan. TechCrunch. August 5, 2025.

👉 This concludes Part II of our crossover series. Part I in Deep Learning with the Wolf explored Genie 3 as a deep learning milestone. Here in Droids, we’ve seen its robotics implications. Together, they sketch a future where AI doesn’t just describe the world — it learns to live in it.