Welcome to the Dreamscape

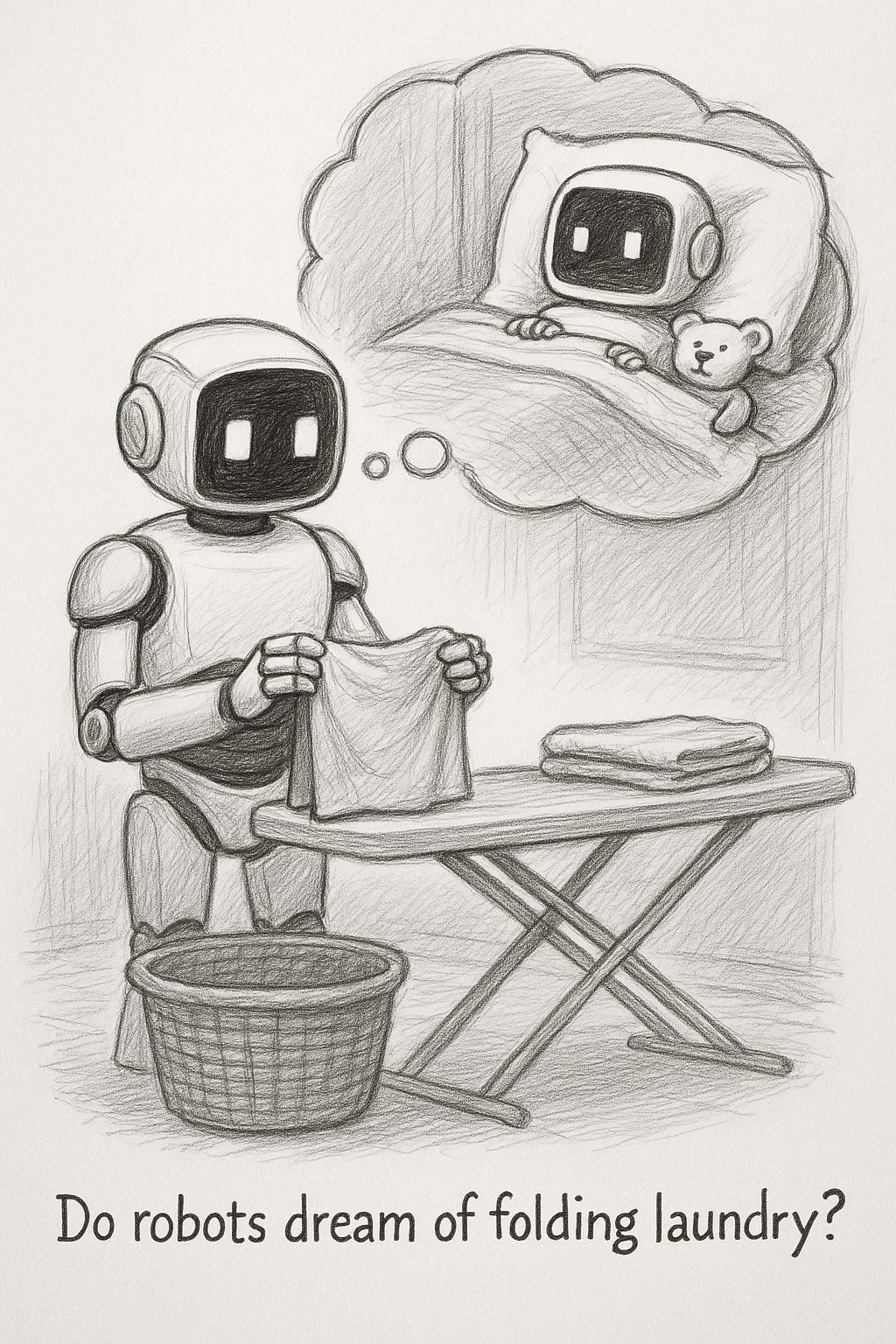

A small, wide-eyed robot closes its eyes. A glowing bubble floats above its head, filled with images of laundry, soft toys, and a robotic arm folding shirts in a sunlit bedroom. This isn't a Pixar short. It's a test project I built with Google Veo, inspired by a very real breakthrough in robotic learning: NVIDIA's new DreamGen framework. (For context: on the same day, I read a paper by Jim Fan about robots that dream of folding laundry and decided to try out Google Veo. So today you get Google Veo videos of robots folding laundry while I talk about the Jim Fan paper. To be clear, I generated these images myself; NVIDIA had no part in creating them.)

DreamGen is what happens when robotics meets imagination. Or, more precisely, when foundation video models simulate millions of "what if" scenarios, giving robots access to experiences they've never actually lived. It's a kind of synthetic dreaming, and it just might redefine how machines learn physical tasks. My own experiments were humbler: when I tried various prompts showing robots folding laundry, I had far more failures than successes.

The Paper: Introducing DreamGen

Unveiled by Jim Fan and the NVIDIA GEAR team, DreamGen proposes a four-step recipe:

Fine-tune a SOTA (state-of-the-art) video model on your target robot.

Prompt it with language, asking how your robot would behave in imagined scenarios.

Recover pseudo-actions from those videos using inverse dynamics or latent action modeling.

Train a robot foundation model on this hallucination-turned-data, as if the robot had experienced it.
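To make the recipe above a little more concrete, here is a deliberately tiny Python sketch of the same data flow. It is my own toy illustration under heavy assumptions, not NVIDIA's code: the "video model" is a hypothetical stand-in (`dream_trajectory`) that imagines 2-D gripper positions instead of video frames, the inverse dynamics step assumes the toy rule that each action is simply the difference between consecutive states, and the "robot foundation model" is replaced by a one-parameter linear policy fit by least squares. All function and variable names are hypothetical.

```python
from typing import List, Tuple

Vec = Tuple[float, float]  # hypothetical 2-D gripper position or displacement


def dream_trajectory(start: Vec, goal: Vec, steps: int, alpha: float = 0.25) -> List[Vec]:
    """Stand-in for steps 1-2 (hypothetical): the 'video model' imagines the
    gripper closing a fraction alpha of the remaining gap to the goal each frame."""
    frames = [start]
    for _ in range(steps):
        x, y = frames[-1]
        frames.append((x + alpha * (goal[0] - x), y + alpha * (goal[1] - y)))
    return frames


def inverse_dynamics(frames: List[Vec]) -> List[Vec]:
    """Step 3: recover pseudo-actions, assuming toy dynamics
    s_{t+1} = s_t + a_t, so a_t = s_{t+1} - s_t."""
    return [(b[0] - a[0], b[1] - a[1]) for a, b in zip(frames, frames[1:])]


def train_policy(frames: List[Vec], actions: List[Vec], goal: Vec) -> float:
    """Step 4 (toy version): fit a policy a = k * (goal - s) by least squares
    on the (imagined state, pseudo-action) pairs. zip drops the last frame,
    which has no following action."""
    num = den = 0.0
    for (x, y), (ax, ay) in zip(frames, actions):
        ex, ey = goal[0] - x, goal[1] - y
        num += ax * ex + ay * ey
        den += ex * ex + ey * ey
    return num / den


GOAL = (1.0, 0.5)
frames = dream_trajectory(start=(0.0, 0.0), goal=GOAL, steps=10)
actions = inverse_dynamics(frames)
k = train_policy(frames, actions, GOAL)
print(f"learned gain k = {k:.3f}")  # learned gain k = 0.250

# Roll the learned policy out from a start it never "dreamed" about.
s = (0.2, -0.3)
for _ in range(50):
    s = (s[0] + k * (GOAL[0] - s[0]), s[1] + k * (GOAL[1] - s[1]))
print(f"final position = ({s[0]:.3f}, {s[1]:.3f})")  # final position = (1.000, 0.500)
```

The point is the shape of the pipeline: the policy never sees a real demonstration, only imagined states paired with actions recovered from them, yet the learned gain still steers the gripper to the goal from a starting point outside its "dream."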

And the results? DreamGen took a robot trained on only a single task, pick-and-place, and enabled it to generalize to 22 new behaviors, including pouring, scooping, ironing, and hammering. No human demos of the new tasks. No extra teleoperation. Just dreams.

Why It Matters

This is more than clever data augmentation. It's a shift in how we think about experience itself. Robots trained this way can generalize to unseen verbs and objects. In the team's reported experiments, success rates on new verbs jumped from 0% to over 43%. In unseen environments, performance went from 0% to 28%.

In other words: the robot imagined folding a towel—then actually did it.

The Veo Test: A Dream of Laundry

Curious how this might look from the robot’s perspective, I turned to Google Veo and Flow. It's still early days in terms of interface polish, but the potential is unmistakable.

I started with a simple prompt: a robot tucked in bed, dreaming about folding laundry. The results were mixed. Some of my earliest attempts spun into dystopian robot nightmares. Yikes! What was this? Is this robot pregnant and spawning another robot?

And, in my second attempt, the robots rebelled against their overlords and started to shred the laundry. Don’t laugh. This is how the robot rebellion will happen.

But then came my first “wins”: the videos included above, in which the robot, watched over by his faithful pal the teddy bear, learns to fold towels.

It’s not technically DreamGen—but it captures the same core idea in a way that’s instantly understandable. Watching a robot dream about folding laundry is a visual stand-in for something much bigger: the ability to learn from imagined experience.

DreamGen is doing something profound. It’s giving robots a way to simulate tasks they’ve never been shown, to fill in the gaps with plausible futures, and to act on that learning. That’s hard to explain in code—but it’s easy to see in a dream sequence.

This little short isn’t just cute. It makes the concept real. You watch it, and you get it: the robot didn’t have to be taught everything. It imagined the task first.

The Takeaway

DreamGen is part of a bigger shift in robotics and AI: toward training paradigms that prioritize imagination, simulation, and low-cost data generation over fleets of human operators. It’s also deeply visual—making it the perfect muse for tools like Google Veo.

And if you happen to generate a robot dream sequence that includes the robot folding itself into a drawer and humming a lullaby? Just go with it. These models are dreaming, too.

The podcast was generated using NotebookLM and reviewed for accuracy.

🎙️ PODCAST NOTE:

If you’re wondering what “GEAR” means, don’t worry, I had to look it up too. GEAR stands for Generalist Embodied Agent Research, and it’s a group within NVIDIA Research focused on building robots that can generalize, meaning they don’t just memorize tasks; they adapt to new ones. So when the male AI podcaster says “GEAR Research,” he is referring to the folks at NVIDIA working on some of the most advanced robotic learning systems out there.

Additional Resources For Curious Humans:

#AI #Robotics #DreamGen #RobotLearning #TextToVideo #FoundationModels #NVIDIA #PhysicalAI #SyntheticData #MachineLearning #RobotDreams #DROIDS #JimFan #DreamGenpaper #thedroidsnewsletter
